ChatGPT: Sam Altman, RLHF, and the Future of Jobs under AI Technology

ChatGPT: a technological advancement that has provided a breakthrough in the development of artificial intelligence and advanced our basic understanding of the field. From the invention of the transistor in the late 1940s to the development of the internet, each milestone has laid the foundation for ChatGPT and more powerful AI models to be born. We now have access to a language model that can hold sophisticated conversations with us and assist us with almost anything we need. Other similar, and potentially more advanced, models are in the early phases of development. These include Meta’s LLaMA, “A foundational, 65-billion-parameter large language model”, and Google’s Bard, an experimental AI model built on LaMDA, a model family whose largest version has 137 billion parameters. These models are a step towards what may be humanity’s greatest mission: the invention of AGI (Artificial General Intelligence). Though capable of a great deal of good, these models have the potential to disrupt everything. Everything.

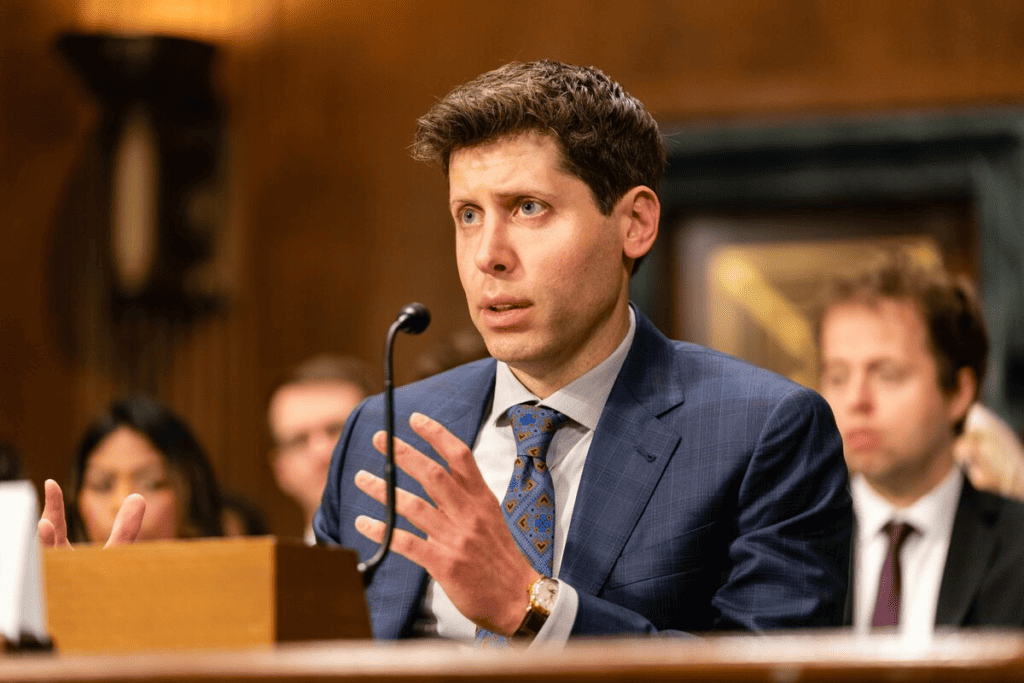

LLMs (Large Language Models) are increasingly taking the spotlight in our modern landscape. Their development has raised many ethical concerns about AI, and companies are under scrutiny from both the public and governments. In fact, Sam Altman, CEO of OpenAI (the developer of ChatGPT), recently testified at a Senate hearing on AI. Lawmakers are clearly worried about what the future holds. Ensuring this technology is advanced in a responsible and accountable manner is crucial; otherwise, the consequences could be grave.

In this article, I will begin by discussing the Senate hearing and Altman’s conversation with Lex Fridman on the latter’s podcast. I will then analyse both and share the key takeaways. Both are significant discussions because they shed light on the future of artificial intelligence and on potential pathways toward more advanced AGI models. As of now, Sam Altman’s OpenAI is arguably the leader of this field and will strongly influence future advancements. You could say it is the Google of AI. Because the leader of any company strongly shapes the organisation, Altman’s views and approach are vital, and these conversations give vivid insight into exactly this.

Sam Altman and OpenAI

OpenAI is the company that developed ChatGPT, and Sam Altman is its CEO. As mentioned earlier, Altman testified at the Senate hearing on AI, and he was recently on Lex Fridman’s podcast, where they discussed various intriguing topics. From both conversations, viewers gained clear insight into his personality, ChatGPT and upcoming plans, and the future of AI. In the hearing, Altman made clear that lawmakers should impose strict regulation on his own industry, a stance that surprised and charmed both the Senate and the public. Unlike most tech companies, OpenAI seems to welcome all sorts of feedback and criticism about its fastest-growing product, ChatGPT.

In the hearing, Altman came across as an extremely humble and concerned individual. He seems dedicated to ensuring the safe and ethical development of AI, and he repeatedly urged lawmakers to regulate this technology more effectively. It is evident that he and OpenAI are aware of the risks AI poses, which should calm the nerves of many who are worried by this advancement.

“We think that regulatory intervention by the governments will be critical to mitigate the risks of increasingly powerful models. For example, the U.S. government might consider a combination of licensing and testing requirements for development and release of AI models above a threshold of capabilities…”

Sam Altman in the Senate Hearing

The entire hearing is brilliantly covered by Slate in its must-read article, “Sam Altman Charmed Congress. But He Made a Slip-Up.” I certainly recommend reading this highly informative article!

Sam Altman on Lex Fridman’s podcast

Altman was recently on Lex Fridman’s podcast, where they discussed several important and timely topics, including GPT-4, AI safety, AGI, competition, and the future of jobs. I highly recommend listening to or watching the episode. It gives vivid insight into current affairs in the tech field, and Altman also dives into how LLMs like ChatGPT are built and trained.

The future of jobs was another highly relevant topic of discussion. Altman reiterated that these language models are not here to replace humans but to help us. When asked which field he believes will be most affected, he mentioned that customer service professionals could be impacted.

“It’s a tool not a creature”

Sam Altman discussing ChatGPT on Lex Fridman’s podcast

Altman revealed that OpenAI places particular emphasis on the responsible and ethical development of its products. His company endeavours to reduce the possibility of underlying bias within the model. There have been many instances where the LLM has not been as objective as desired, and such moments have gone viral on various social media platforms, casting the model in a negative light. In the conversation with Fridman, Altman mentioned that critics have reached out to say that the problem of bias has been considerably reduced from GPT-3.5 to the new GPT-4.

The CEO also took to Twitter to address this prevailing issue of bias. This will certainly be an area OpenAI and other companies will want to improve even further, to ensure the reliability and accuracy of such LLMs.

“we are working to improve the default settings to be more neutral, and also to empower users to get our systems to behave in accordance with their individual preferences within broad bounds. this is harder than it sounds and will take us some time to get right.”

Sam Altman on Twitter

Developing ChatGPT – RLHF

Reinforcement Learning from Human Feedback (RLHF) is an integral part of these state-of-the-art OpenAI models. In short, it is a training method that uses human judgements to steer the model toward responses that people find helpful and natural. This makes the technology easier for the public to embrace, and potentially lays a pathway for future AGI developments.

As part of RLHF, the language model learns from how a sample of humans responds to, and judges, its output. First, human labellers write example answers to a wide variety of prompts, and the model is fine-tuned on these demonstrations. Next, the model generates several answers to the same prompt, and labellers rank them from best to worst. These rankings are used to train a separate reward model, which learns to predict which responses humans would prefer. Finally, the language model is optimised with reinforcement learning to produce responses that the reward model scores highly, repeatedly adjusting its behaviour to align with the feedback it has received. More, and more consistent, human feedback generally brings the model’s responses closer to what people expect.
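RLHF is commonly described in three stages: humans compare model responses, a reward model learns to predict those preferences, and the language model is then optimised to score highly under that reward model. The deliberately tiny Python sketch below walks through those stages. It is an illustration under loud assumptions, not how OpenAI implements RLHF: three hypothetical candidate responses are described by two hand-made features (politeness and helpfulness) instead of raw text, the human comparisons are made up, the reward model is linear and trained on the pairwise loss -log sigmoid(r(preferred) - r(rejected)), and in place of PPO on a neural network, the policy is a softmax over the three candidates updated with an exact policy gradient plus a KL penalty toward a uniform "pre-trained" reference.

```python
import math

# Hypothetical candidate responses to one prompt, each described by two
# hand-made features (politeness, helpfulness). Real reward models read the
# raw text with a neural network; features keep this toy sketch inspectable.
CANDIDATES = {
    "rude and unhelpful": (0.0, 0.0),
    "polite but vague": (1.0, 0.2),
    "polite and helpful": (1.0, 1.0),
}

# Stage 1: made-up human comparisons as (preferred, rejected) pairs.
COMPARISONS = [
    ("polite but vague", "rude and unhelpful"),
    ("polite and helpful", "polite but vague"),
    ("polite and helpful", "rude and unhelpful"),
]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights, features):
    """Linear reward model: a weighted sum of the response's features."""
    return sum(w * f for w, f in zip(weights, features))


def train_reward_model(comparisons, epochs=500, lr=0.1):
    """Stage 2: fit the reward model by gradient descent on the pairwise
    loss -log sigmoid(r(preferred) - r(rejected))."""
    weights = [0.0, 0.0]
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            fp, fr = CANDIDATES[preferred], CANDIDATES[rejected]
            margin = reward(weights, fp) - reward(weights, fr)
            # d(loss)/d(margin) = -(1 - sigmoid(margin)), so step along (fp - fr).
            scale = 1.0 - sigmoid(margin)
            weights = [w + lr * scale * (a - b) for w, a, b in zip(weights, fp, fr)]
    return weights


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def optimize_policy(weights, beta=0.1, steps=200, lr=0.5):
    """Stage 3: raise expected reward minus beta * KL(policy || reference).

    The policy is a softmax over the fixed candidates and the reference is
    uniform, a stand-in for the pre-trained model. Real systems use PPO on
    a neural network instead of this exact gradient."""
    names = list(CANDIDATES)
    rewards = [reward(weights, CANDIDATES[n]) for n in names]
    ref = 1.0 / len(names)
    logits = [0.0] * len(names)
    for _ in range(steps):
        probs = softmax(logits)
        # Per-response value: reward minus the KL penalty term beta * log(p / ref).
        values = [r - beta * math.log(p / ref) for r, p in zip(rewards, probs)]
        baseline = sum(p * v for p, v in zip(probs, values))
        # Exact gradient of the regularised objective w.r.t. each softmax logit.
        logits = [x + lr * p * (v - baseline) for x, p, v in zip(logits, probs, values)]
    return dict(zip(names, softmax(logits)))


weights = train_reward_model(COMPARISONS)
policy = optimize_policy(weights)
for name, prob in sorted(policy.items(), key=lambda kv: -kv[1]):
    print(f"{prob:.3f}  {name}")
```

The KL penalty (`beta`) is the design choice worth noticing: it keeps the policy from drifting arbitrarily far from the reference model in pursuit of reward. Published RLHF setups use a similar penalty against the original model for the same reason.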

This method is covered in more technical depth by Hugging Face, an AI company, in the article “Illustrating Reinforcement Learning from Human Feedback (RLHF)”.

Problem of Bias with RLHF

Unfortunately, RLHF also gives rise to an immense problem: bias. We feed data from humans into the model to make it more human-like, but the bitter truth is that humans are inherently biased, and those biases get passed on to the model. This is one of the reasons such applications are not as objective as we would like them to be. In the future, there must come a time when models are trained to filter out any bias they encounter. This will be a key identifier of an AGI model.
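How labeller bias flows into the model can be seen even in a toy reward model. The Python sketch below is an illustration under stated assumptions, not a measurement of any real system: four hypothetical responses are described by two hand-made features (answer quality, and whether the answer leans toward a hypothetical "viewpoint A"), the two sets of human comparisons are made up, and the reward model is linear and trained on the pairwise loss -log sigmoid(r(preferred) - r(rejected)). One labeller pool always picks viewpoint A when quality is equal; the other is split evenly.

```python
import math

# Hypothetical responses described by two hand-made features:
# (quality, leans_toward_view_a). A neutral reward model should reward
# quality and be indifferent to which viewpoint a response leans toward.
RESPONSES = {
    "good, leans A": (1.0, 1.0),
    "good, leans B": (1.0, 0.0),
    "poor, leans A": (0.0, 1.0),
    "poor, leans B": (0.0, 0.0),
}

# Made-up (preferred, rejected) labels from a pool of labellers who prefer
# better answers but, when quality is equal, always pick viewpoint A.
BIASED_LABELS = [
    ("good, leans A", "poor, leans A"),
    ("good, leans B", "poor, leans B"),
    ("good, leans A", "good, leans B"),
    ("poor, leans A", "poor, leans B"),
]

# A balanced pool judging the same pairs: at equal quality, half the
# labellers pick A and half pick B.
BALANCED_LABELS = [
    ("good, leans A", "poor, leans A"),
    ("good, leans B", "poor, leans B"),
    ("good, leans A", "good, leans B"),
    ("good, leans B", "good, leans A"),
    ("poor, leans A", "poor, leans B"),
    ("poor, leans B", "poor, leans A"),
]


def train_reward_model(labels, epochs=500, lr=0.1):
    """Fit a linear reward model on the pairwise loss
    -log sigmoid(r(preferred) - r(rejected)) by gradient descent."""
    weights = [0.0, 0.0]
    for _ in range(epochs):
        for preferred, rejected in labels:
            diff = [a - b for a, b in zip(RESPONSES[preferred], RESPONSES[rejected])]
            margin = sum(w * d for w, d in zip(weights, diff))
            scale = 1.0 / (1.0 + math.exp(margin))  # equals 1 - sigmoid(margin)
            weights = [w + lr * scale * d for w, d in zip(weights, diff)]
    return weights


def viewpoint_gap(weights):
    """Reward difference between two equally good answers that differ only in viewpoint."""
    good_a, good_b = RESPONSES["good, leans A"], RESPONSES["good, leans B"]
    return sum(w * (a - b) for w, a, b in zip(weights, good_a, good_b))


biased = train_reward_model(BIASED_LABELS)
balanced = train_reward_model(BALANCED_LABELS)
print(f"biased labels:   viewpoint gap = {viewpoint_gap(biased):.2f}")
print(f"balanced labels: viewpoint gap = {viewpoint_gap(balanced):.2f}")
```

With the skewed labels, the reward model learns to pay a bonus for viewpoint A even though answer quality does not justify it, and a language model optimised against that reward would inherit the preference. With the balanced labels, the viewpoint weight stays close to zero.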

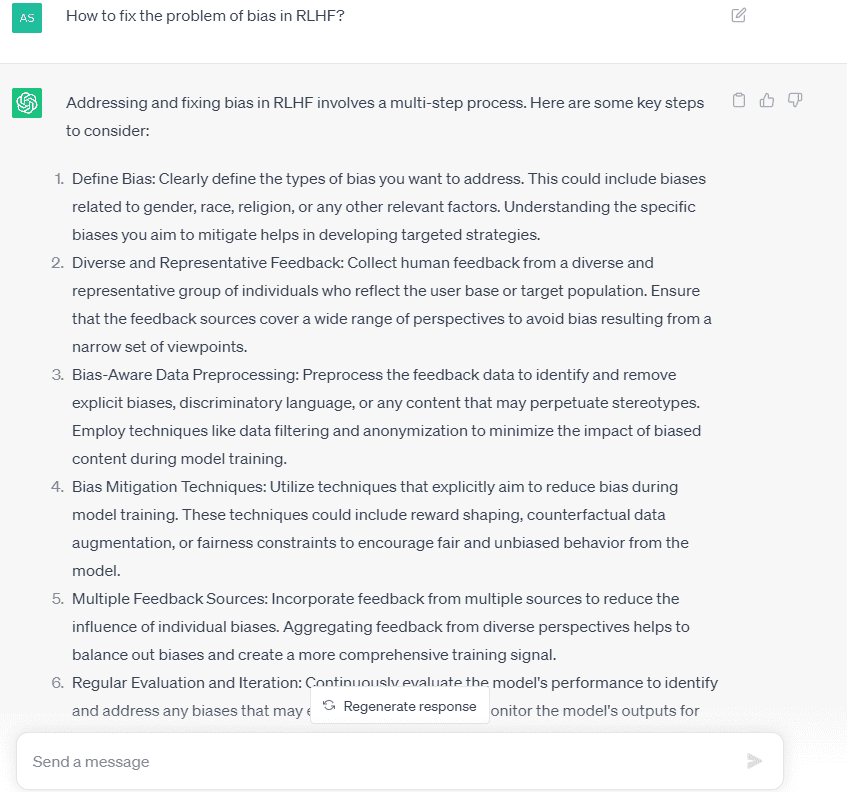

With every problem comes a solution waiting to be explored, and the issue of bias is no exception: it can be countered while still making the language model as human as possible. According to ChatGPT itself, these are the potential ways to fix the problem of bias in RLHF:

The Future of Jobs

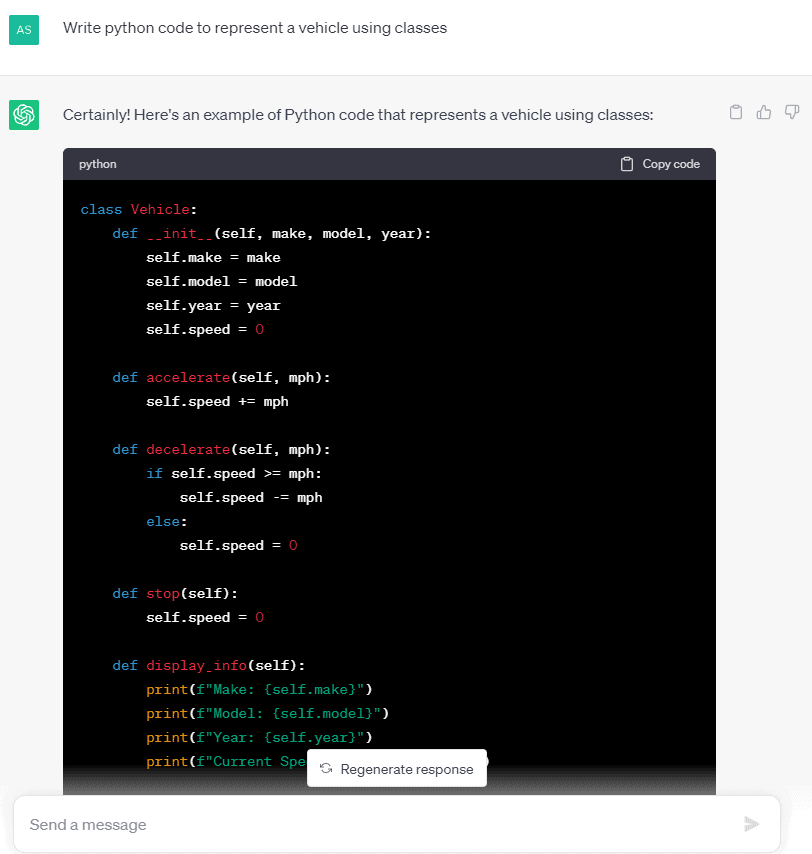

There is growing concern that such advanced technologies could replace numerous jobs, and this anxiety is widespread in society. AI has already taken over some tasks in fields such as customer service, communications, and content writing, and these industries are rapidly shifting toward automating much of their work. For example, many content writers are losing their livelihoods because language models like ChatGPT can easily do much of their job. From writing web articles to drafting social media posts, AI can complete these tasks significantly faster. Notably, the model’s output is often of similar quality to a human’s; the difference is that it is produced in seconds. These powerful models can even write efficient and accurate code, posing a threat to programmers all over the world.

Without a shadow of a doubt, every field will continue to drastically change. Some will welcome the new normal whereas others will oppose it. But this is the reality, and I strongly believe embracing this advancement is the only way forward. We have only seen a glimpse of AI capabilities, which will only improve over time.

In my opinion, programming in particular will benefit vastly from cutting-edge AI models. When the first high-level programming language was invented, there was growing concern in the community that such an efficient method of coding would reduce the need for workers in the industry. However, that was certainly not the case, and we are seeing something similar today. AI will make programming much more efficient, allowing coders to focus on solving more technical and complex problems.

Moving Forward

The future of artificial intelligence looks bright. Certainly, AGI will be the next big step in this industry. If executed properly, this new technology could change our civilisation forever in unimaginable ways. For now, models like ChatGPT and Bard offer only a glimpse of what the future holds. They are still in the early stages of development and require consistent improvement to become truly game-changing. Only when AI developments reach the point where our lives are no longer what they used to be can it be said with conviction that this technology has changed the world. I strongly believe that day isn’t too far away.